Yesterday (March 24th, 2016), Microsoft launched Tay, an

artificial intelligent chat bot developed by Microsoft’s Technology and Research and Bing teams to experiment with and conduct research on conversational understanding. Tay is designed to engage and entertain people where they connect with each other online through casual and playful conversation. The more you chat with Tay the smarter she gets, so the experience can be more personalized for you.

Source: https://www.tay.ai, retrieved 25th March 2016

In less than 16 hours of interacting with Twitter users, the bot went from being fairly nice and pleasant to being plain silly and, later, sexist, racist and downright offensive. You can see examples here, here, here and here.

The project has now been put on hold, and some of the most offensive tweets deleted.

This episode reminded me of Jaron Lanier’s take on the true meaning of the Turing test.

The Turing test, designed by computer scientist Alan Turing (who called it ‘The Imitation Game’), is a mechanism to assess a machine’s ability to imitate human behaviour to the point where it is indistinguishable from that of a human being. A machine that can fool an assessor into believing that he or she is talking with another human being is deemed to be of high quality.

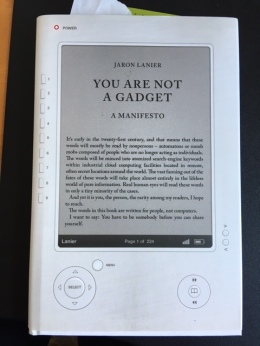

However, in the book ‘You are not a gadget – A manifesto’, Lanier argues that the test says more about human standards than about the state of technology. On page 32, Lanier writes:

(T)he Turing test cuts both ways. You can’t tell if a machine has gotten smarter or if you’ve just lowered your own standards of intelligence to such a degree that the machine seems smart. If you can have a conversation with a simulated person presented by an AI program, can you tell how far you’ve let your sense of personhood degrade in order to make the illusion work for you?

People degrade themselves in order to make machines seem smart all the time. Before the crash, bankers believed in supposedly intelligent algorithms that could calculate credit risks before making bad loans. (…)

Did that search engine really know what you want, or are you playing along, lowering your standards to make it seem clever?

Given that Tay reacted to, and learned from, interactions with Twitter users, I would say that Tay’s debacle actually says a lot about evolving (lowering) human standards. Maybe Tay would pass the Turing test, after all.

Did you follow Tay’s story? What do you make of it?

Whoa! I just caught the headline today but I didn’t go in to read much about it. Now that I know what happened, I am quite shocked. I guess AI still has great room for improvement. I’m more surprised that the team didn’t run more thorough tests before putting Tay online.

True. They could also have blocked (or at least closely monitored) certain topics or keywords.

I didn’t follow this at all. I saw some headlines, of course, but didn’t really pay attention until reading your post just now. Seeing this from a distance, and with the benefit of having read your post, I’m not so sure Tay was broken. It seems she learned very quickly to speak in a very human way. Unfortunately, it was not the most beautiful side of humanity we got to see. What would be interesting now is to investigate which human networks she tapped into to learn from. I think there is a whole lot of research potential behind it, which could, for example, show how people are influenced to vote for or follow a radical leader. It could also show how people radicalise. That could be a very interesting result of this test.

Yes, I agree with you. Since the objective was to learn from interacting with Twitter users… it learned very quickly.

You raise a very good point though, about studying radicalisation. Could be interesting to look at frequency vs. language?! Very interesting, indeed.
