AI is becoming a common part of online shopping, but are there times when we still prefer human judgement?

Research suggests that the answer depends on both the nature of the product and the customer’s certainty about what they want.

Some time ago, I reviewed a paper by Fei Jin and Xiaodan Zhang examining when customers would accept an AI recommendation vs a human one.

Jin and Zhang found that customers are more willing to accept an AI recommendation when it focuses on the material or tangible aspects of a product. In contrast, when the recommendation focuses on experiential aspects, customers tend to prefer that it come from a human.

I have now read a paper by Shili Chen, Xiaolin Li, Kecheng Liu and Xuesong Wang that found the same effect. However, whereas Jin and Zhang posited that the observed preferences stemmed from the perceived competencies of AI vs human agents, Chen and colleagues found that the preference was (partly) shaped by customers' certainty regarding the purchase: that is, the extent to which customers knew exactly what they wanted.

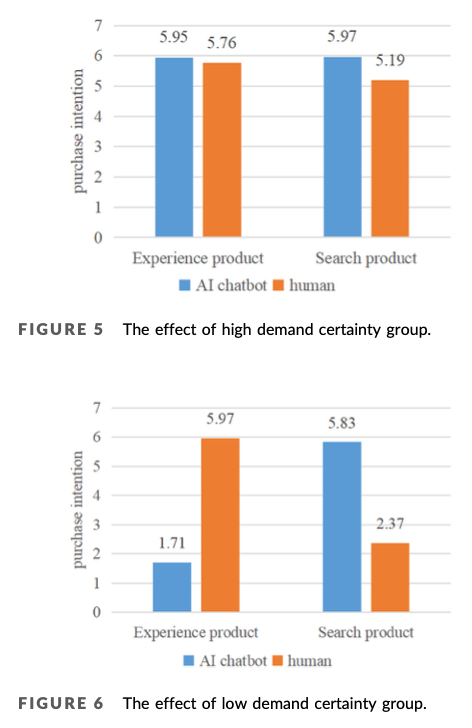

Specifically, Chen and colleagues found that for search products (i.e., those high in tangible attributes), AI chatbots were preferred to humans regardless of demand certainty. For experiential products, however, customers preferred humans only when demand certainty was low. When demand certainty was high, this preference disappeared.

These findings deepen our understanding of when AI recommendations are acceptable to consumers, and why. It’s not simply about what’s being recommended, but also how confident we are as customers. When certainty is low and the product experience matters, human judgement still carries weight. But when we know what we want, or when decisions are based on tangible features, AI may well be the preferred advisor.

The details for this paper are: Chen, S., X. Li, K. Liu, and X. Wang (2023). Chatbot or Human? The Impact of Online Customer Service on Consumers' Purchase Intentions. Psychology & Marketing 40(11), 2186–2200.

When do you find AI recommendations helpful?