As chatbots become an integral part of customer service, mistakes remain inevitable. Whether the chatbot fails to understand the customer’s intent or makes an error in executing an order, such failures raise a key question: who do customers blame, themselves or the company?

Blame attribution shapes customers’ subsequent behaviour. For instance, if I think that I received the wrong pizza because the lighting was poor where I was and I probably clicked on the wrong option, I will tell myself that, next time, I need to make sure that I have proper lighting or, maybe, use an alternative means of ordering the pizza. However, if I think that it was the brand’s fault, because they are using a sub-standard AI solution, then I will demand a refund and an apology, and may even post a negative review.

Given how widespread AI chatbot technology has become, and how likely it is to fail, it is important for businesses to understand who customers blame and why. Daniela Castillo therefore set out to investigate these questions, together with Emanuel Said and me.

The approach

The first step was to conduct in-depth interviews with customers to understand their experiences of using chatbots, and which experiences left them satisfied and which left them frustrated. The interviews highlighted that customers really value their ability to decide when to interact with AI and, consequently, that the perception of choice (or lack of it) plays a key role in their assessment of (and satisfaction with) the interaction. Moreover, factors such as how easy it was to find information about alternative contact options, whether they were able to get out of conversation loops with the chatbot, and how long it would take to reach a human agent compared with the chatbot all shaped the extent to which customers perceived that they had had a choice in using the chatbot (i.e., voluntary interactions) or not (i.e., forced interactions).

Next, we conducted two experiments to explore how perceived voluntariness, error type (process vs outcome), and error severity (high vs low) influence customer dissatisfaction and blame attribution. Figure 1 provides an overview of the experimental setup.

Key results

=> Impact of Voluntary vs Forced Interactions on Dissatisfaction and Blame Attribution

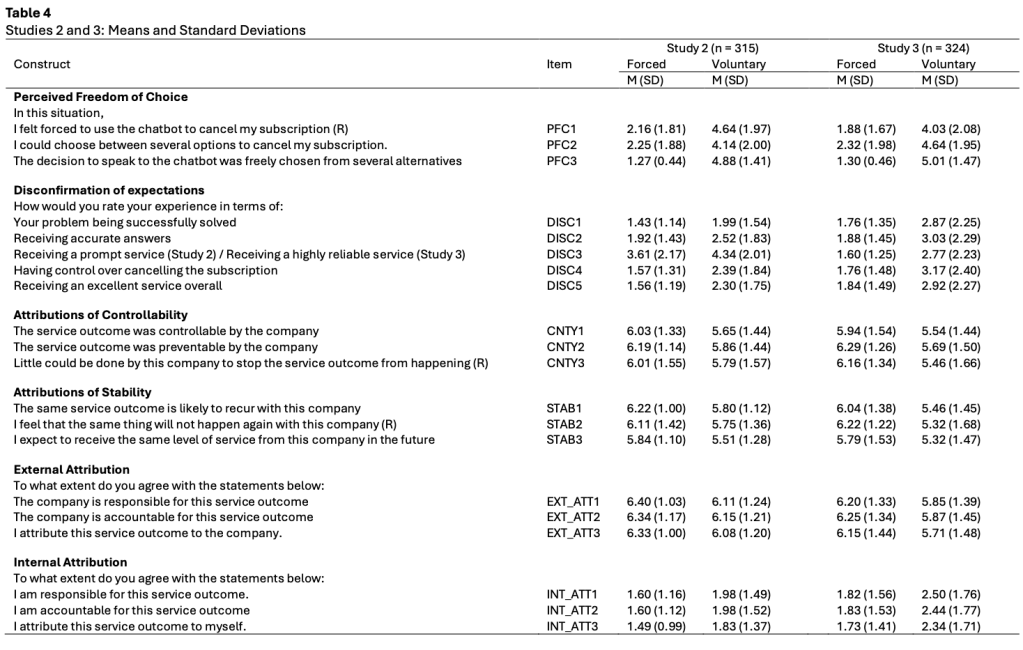

In both experiments, forced interactions resulted in more customer dissatisfaction than voluntary interactions. This is because customers who are forced to interact with the chatbot have higher expectations about how it will work and, consequently, are more disappointed when it doesn’t. Customers in forced interactions were also more likely than those in voluntary ones to blame the firm for the problem rather than themselves. Table 4 summarises the results.

=> Impact of Error Type and Severity for Voluntary vs Forced Interactions

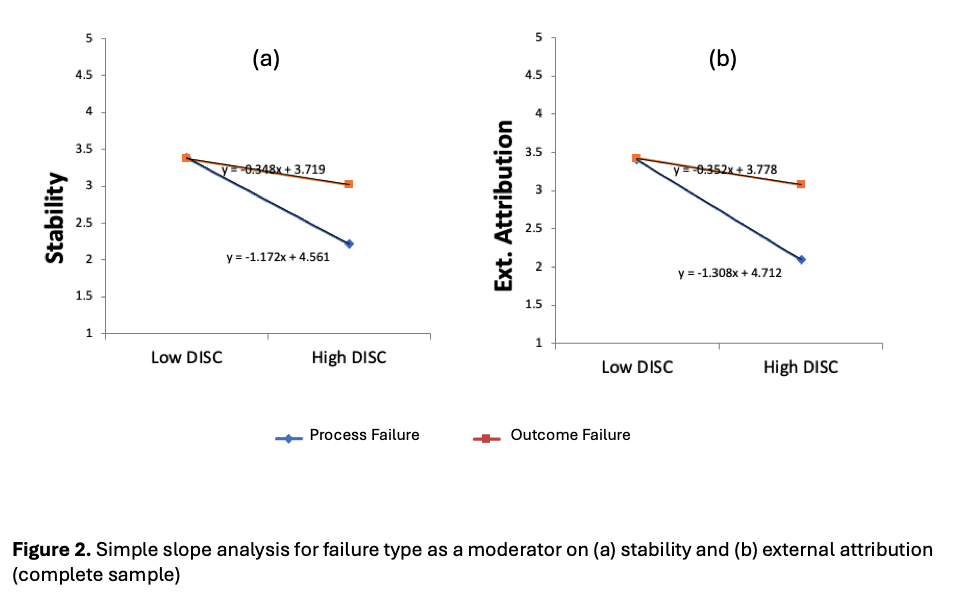

In voluntary interactions, customers are more likely to blame the company for process failures than for outcome failures (see Figure 2), and for failures with severe consequences than for those with minor consequences (see Figure 3).

In forced interactions, however, this differentiation was not present. Instead, customers perceived all types of failures as equally problematic. It seems that being forced to use a chatbot leads customers to blame the company for every problem they experience, regardless of whether it relates to the process or the outcome, and regardless of whether its consequences are minor or severe.

What do these results mean for managers considering the integration of chatbots in customer service roles?

- Ensure that alternative channels are available and prominently displayed to enhance perceived choice – Companies should ensure that there are accessible and clearly signposted alternatives to chatbot interactions, such as human agents, e-mail or other digital channels. For example, the chatbot should offer the option of transferring to a human agent at any point, or quick-access buttons for escalating issues. Prominently displaying other options can reduce the feeling of being forced to use the chatbot, enhance perceived choice, and attenuate dissatisfaction with any problems that occur.

- For voluntary chatbot interactions, focus on minimising process errors with significant consequences – Firms should prioritise eliminating process errors and errors likely to have significant consequences for customers. Moreover, they should develop response strategies tailored to the nature and severity of each failure. In particular, they need to apologise and repair the situation quickly in the case of process errors and highly consequential errors, whereas for outcome errors and low-severity problems they can focus on improving chatbot functionality or the navigation between options.

- For forced chatbot interactions, opt for chatbots with specific and clearly articulated functionalities – Companies should design chatbots with specific functionalities, tailored to particular contexts (e.g., a chatbot for customer orders), rather than generalist ones. Moreover, they need to carefully manage customers’ expectations of the chatbot’s capabilities and limitations. For instance, they could use imagery, onboarding and conversational prompts to emphasise that a chatbot can accept and track orders, but can’t process returns.

- For forced chatbot interactions, implement hybrid support models that enable escalation to a human, to relieve the negative perceptions associated with lack of autonomy – Chatbots may serve as the first point of contact in forced interaction models, but there needs to be a clear escalation option when problems start to occur. This approach provides a safety net for more intricate queries, ensuring that users do not feel ignored by the system. Adopting such models could also allow companies to use customer data and feedback to adapt responses dynamically, offering increasingly personalised experiences over time.

Our key message is that customer satisfaction with chatbot interactions depends not just on the technology itself, but also on how it is implemented. Providing clear alternatives, managing expectations, and addressing key failure points can make a significant difference when things, inevitably, go wrong.

The title of the paper is “When AI-chatbots disappoint – The role of freedom of choice and user expectations in attribution of responsibility for failure”. It was published in the journal “Information Technology & People”.

