Artificial intelligence (AI) is revolutionizing customer interfaces through chatbots, voice assistants, and other conversational agents. As these technologies become more human-like in how they respond to our queries, even adapting to specific speaking styles, it becomes increasingly important to understand how consumers perceive and respond to these interactions.

I want to share with you three papers that I have come across recently, which provide valuable insights for companies looking at using AI-based agents in customer interactions. The first two papers both demonstrate the power of carefully designed language and vocal features to enhance perceptions of chatbots and voice assistants. The third complements these two, finding that empathy-based messages improve satisfaction after AI failures.

Here is a bit more information about each paper.

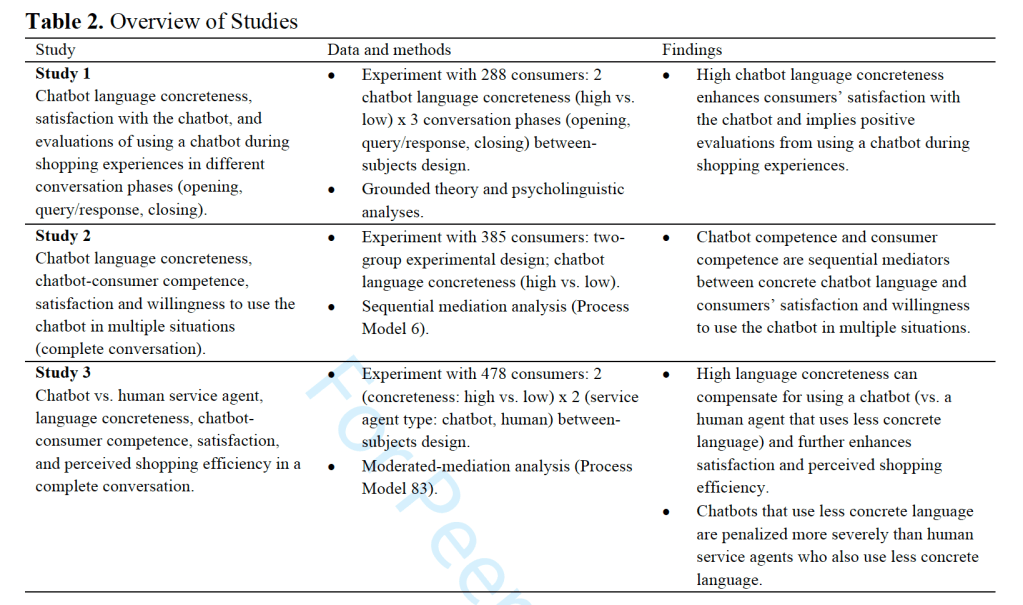

The first paper I want to highlight is by Jano Jiménez-Barreto, Natalia Rubio and Sebastian Molinillo, entitled “How Chatbot Language Shapes Consumer Perceptions: The Role of Concreteness and Shared Competence”. Through a series of experiments, the team investigated the impact of chatbot language on consumer perceptions. They found that when chatbots use more concrete and specific language, it enhances consumers’ satisfaction and their willingness to use the chatbot for multiple purposes. The researchers explain this effect through a perceived competence mechanism, whereby concrete chatbot language signals competence, which consumers then assimilate into their own feelings of competence in interacting with the chatbot.

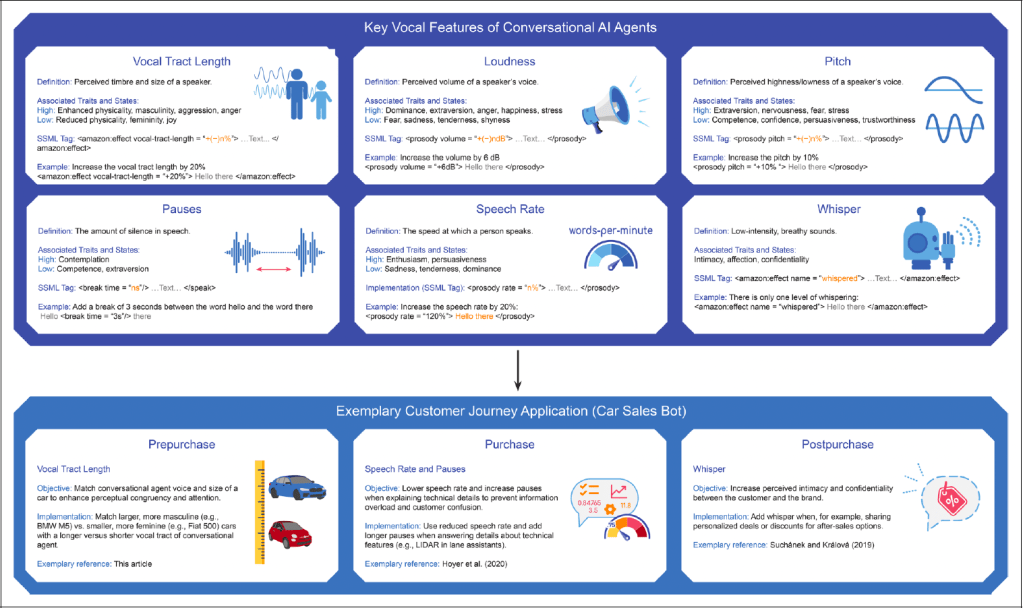

The second paper is by Fotis Efthymiou, Christian Hildebrand, Emanuel de Bellis and William H. Hampton, and is entitled “The Power of AI-Generated Voices: How Digital Vocal Tract Length Shapes Product Congruency and Ad Performance”. This team, too, used a series of experiments. Efthymiou and colleagues investigated how modulating the vocal tract length (VTL) of an AI voice assistant shapes consumer perceptions. They found that increasing VTL led consumers to perceive the AI agent as larger and more masculine, while decreasing VTL had the opposite effect. Further, a longer VTL enhanced the perceived congruency between the AI voice and stereotypically masculine products, while a shorter VTL increased perceived congruency with stereotypically feminine products. This research “indicates the potential risk of a one-size-fits-all strategy for developing AI-powered conversational agents (…) Companies are advised to think more systematically about the vocal design of AI-powered conversational agents as opposed to using off-the-shelf alternatives” (page 12).

Together, these two studies agree that AI attributes signalling competence – be it in terms of the content or the style of the interaction – are critical for positive consumer responses. However, these papers also reveal complex effects depending on contextual factors.

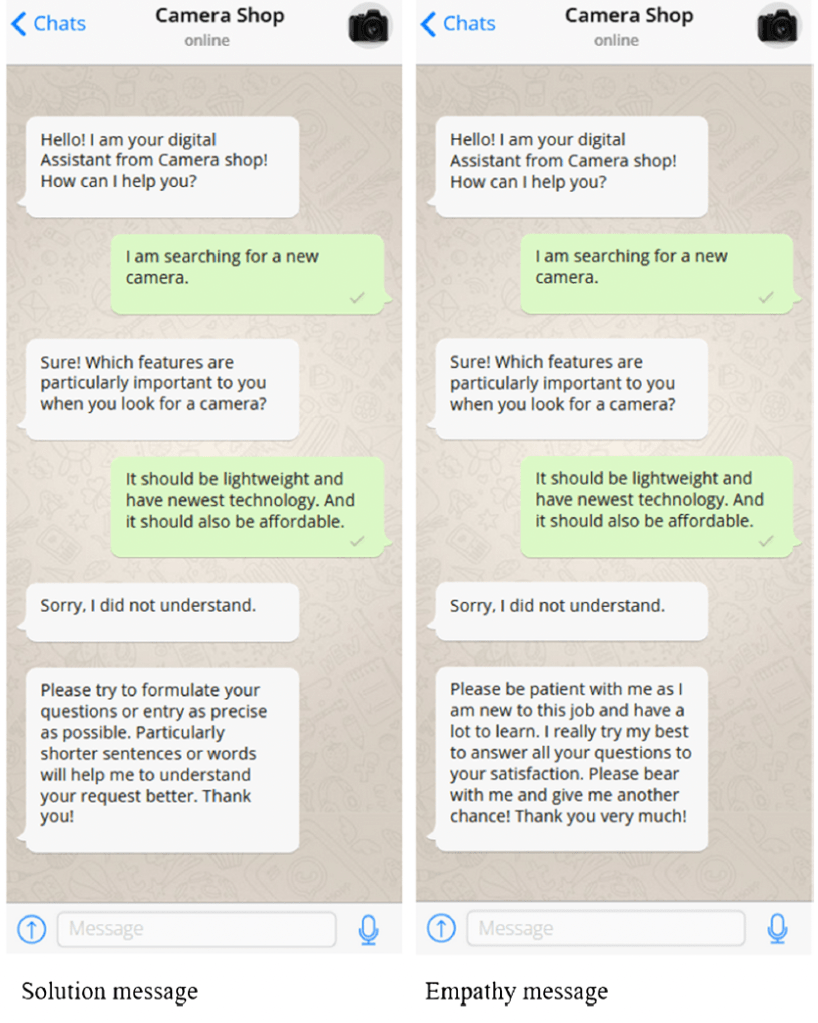

The third study is by Martin Haupt, Anna Rozumowski, Jan Freidank and Alexander Haas. It is entitled “Seeking empathy or suggesting a solution? Effects of chatbot messages on service failure recovery”, and, like the first paper, it examines the effect of chatbot language. But, like the second paper, it looks at a specific context: in this case, recovery from service failure. The research team compared messages that sought user empathy with messages that suggested a solution. They found that the first type (empathy-seeking messages) elicited perceptions of warmth, while the second type (solution messages) elicited perceptions of competence. Both perceptions help to increase post-failure satisfaction and reuse intentions.

However, which type was preferred depended on the circumstances of the failure. Namely:

- In the case of repeated failures, empathy messages are effective, but not solution ones.

- In the case of service failures perceived to be caused by the chatbot, empathy messages are acceptable. However, it is actually detrimental to use solution-focused messages.

- In the case of service failures perceived to be caused by the users themselves, solution-based messages do work. Empathy messages are also acceptable.

That is, “an apology and request for understanding is “always possible” and a less critical approach compared to the solution message, and rather preferable when failure attribution remains unclear” (p.56).

Taken together, these three papers show that we can’t just consider functional aspects of the AI interface. Rather, AI interfaces must be thoughtfully designed in order to ensure customer satisfaction and retention, accounting for consumers’ inferences based on the tone used and contextual factors.

They also show the importance of human-centric insights for effective technology use.
