Chatbots are everywhere, used across a wide range of industries. They promise to improve customer service by offering round-the-clock availability and quick answers, at a fraction of the cost of their human counterparts.

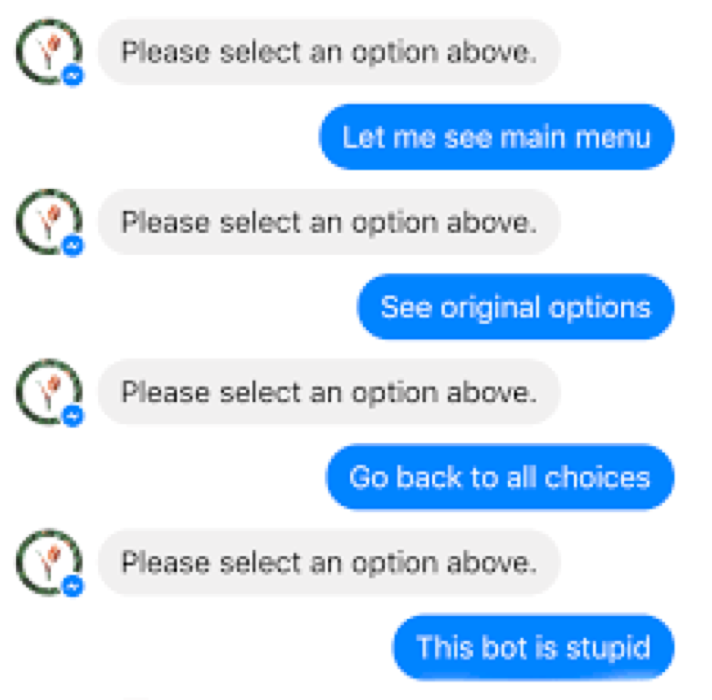

Reality is, however, less… polished.

Even a bit frustrating.

In the paper “The dark side of AI-powered service interactions: exploring the process of co-destruction from the customer perspective”, Daniela Castillo, Emanuel Said and I examine the sources of customer frustration with AI-powered chatbot interactions. To be clear, not all chatbots are powered by artificial intelligence: many simply follow a set of rules and deliver pre-programmed answers. AI-powered chatbots, on the other hand, can learn from interactions and adapt their behaviour depending on customers’ responses.

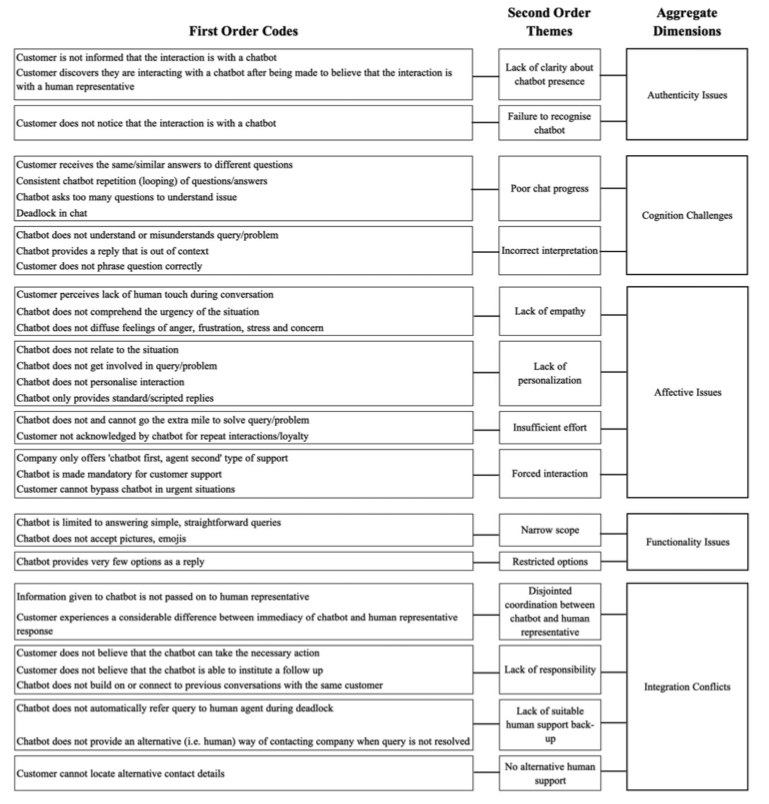

We identify five types of factors that can destroy value for customers when they interact with chatbots.

Authenticity issues

This problem occurs when the customer is not sure whether they are interacting with a chatbot or a human customer service assistant. It is a source of dissatisfaction because the customer feels deceived and is unsure how to approach the interaction and how much effort is expected.

Cognition challenges

This problem occurs when the chatbot struggles to interpret what the customer needs or wants, and progress is slow. It is a source of dissatisfaction because the customer feels unable to control the interaction, which generates a number of negative emotions, such as frustration, anger, agitation and upset.

Affective issues

This problem occurs because, unlike human customer support assistants, the chatbot lacks empathy. It is a source of dissatisfaction because the impersonal interaction leaves the customer feeling undervalued and detached.

Functionality issues

This problem occurs because the chatbot can only assist with a restricted range of issues and types of queries and is therefore deemed to be of limited help. It is a source of dissatisfaction because it is seen as a waste of time: the customer must either rephrase the query repeatedly to try to get an answer, or look for the information elsewhere.

Integration conflicts

This problem occurs when information is lost during an interaction with the chatbot, or during the handover to a human customer support assistant. It is a source of dissatisfaction because it causes frustration and is seen as a waste of time, while the lack of traceability and transaction memory causes anxiety.

These effects can be cumulative. For instance, frustration over the chatbot's limited cognitive abilities may be amplified when the customer realises, only afterwards, that they have been interacting with a chatbot; or when the conversation is handed over to a member of staff but all of its details have been lost.

This insight is important because it addresses a knowledge gap regarding the use of AI applications in customer service settings. Moreover, it focuses on negative experiences, which can have a disproportionate effect on consumers’ evaluation of their interactions with a firm and on their subsequent behaviour, and which are difficult to recover from.

Based on the findings from this study, we make the following recommendations:

“It is important for managers to realize that when AI applications, such as chatbots, are introduced to the frontline, customers view such applications as a substitute for human (employees). As a result, customers hold similar (…) expectations regarding service levels. In light of this, it is important for service providers to help customers understand any limitations inherent in the AI application and to ensure that the chatbot explains the process to adopt when faced with such limitations so as to avoid customer resource loss. More precisely, it is important for service providers to understand that customer queries can vary significantly in their degree of complexity and involvement. While AI chatbots can easily tackle simpler queries, problems arise when they face more complex questions. Service providers should first ensure that the question’s degree of complexity is identified as soon as possible, and then offer, if the question is determined to be complex, a clear and seamless chat transfer to a human support representative as early on in the process as possible.

“Although chatbot disclosure at the start of an interaction may negatively prejudice customers against the effectiveness of such chatbots (…), our findings convey the negative impact of perceived deception by service providers. Customers (…) may erroneously judge a human (assistant) to be a chatbot, or vice versa, when the (…) identity is not disclosed. Service providers are therefore encouraged to clearly advise customers of the identity of the (assistant) to avoid feelings of deception and distrust. Such a notification can be offered at the start of the interaction or even at the end (…). (D)isclosing the presence of a chatbot may be regulated or considered standard practice in the near future, in light of ethical concerns.”

The paper reporting this study was published in The Service Industries Journal. This link provides access to a limited number of free copies. You can also find a free pre-print version of the paper here.
